Using Docker to explore Airflow and other open source projects

I’m in love with the idea that open source software has, and will continue to, change the world as we know it. It’s amazing how much of the society we live in today is built on top of free resources that are accessible to the public. It’s easy to take for granted, but when I try to explain the concept to a friend who knows nothing about the software world, it blows their mind.

But open source software grows at an overwhelming pace, and reading about a piece of software is not the same as trying it out. Recently, I’ve been using Docker to speed up testing new software that I’d like to try, and since it’s worked nicely for me, I thought it could help some readers here.

Before we start

You’ll need to install Docker

You’ll need to know your way around a command line, and some git

Choose a project

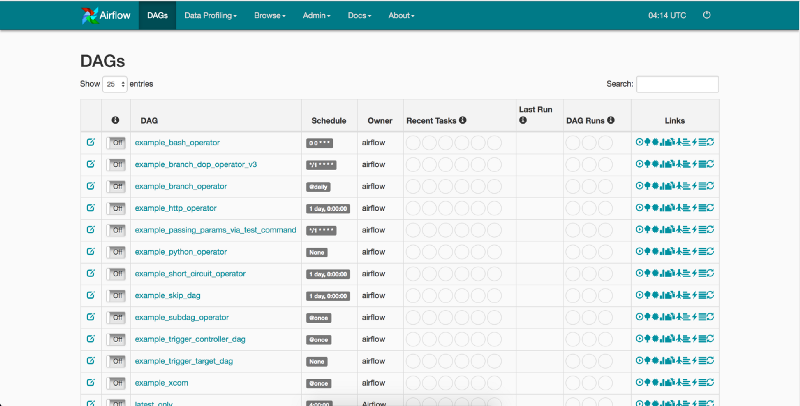

I’ve been meaning to check out Airflow, formerly an open source project at Airbnb and now an Apache project. Airflow is a Python project, but I also knew it has a webserver component (I’d seen the dashboard screenshots online), and I figured it must have some kind of database built in. So I jumped to Google to see whether there was a dockerized version of Airflow that combined all the working pieces, and voilà, the first result for an “airflow docker” search was https://github.com/puckel/docker-airflow.

Coding Start

Start by cloning the repository containing the Dockerfile onto your local machine.

$ git clone https://github.com/puckel/docker-airflow

The docker-airflow repo has a Dockerfile you can edit to add extra packages, or to change the Python version for example. This is where you can play around to see what kind of environment you can expect in a production deployment of Airflow. This kind of insight is something I find critical to understanding the dependencies of a technology, which helps me decide whether I’m willing to deploy it.

After looking over the Dockerfile, you can build the image using:

$ docker build --rm -t puckel/docker-airflow .

Before moving forward, you might be thinking that such a long command will be hard to remember if you come back to this tech a few weeks later to pick up where you left off (we all get distracted). At this point, I recommend starting a Makefile in your directory with these commands saved as targets. The rest of this post uses three such targets: run to start the container, tty to open a shell inside it, and kill to stop the running containers.

Once you save this Makefile into the directory you can spin up the docker container using:

$ make run

After the image builds and the container starts, you can check out the Airflow GUI at http://localhost:8080

If you saved the Makefile above, you can run make tty to open a shell in the container you just started.
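If you would rather not write a Makefile, the same three helpers can be approximated with plain shell functions. This is a rough sketch, not the Makefile used in this post: the function names and the DOCKER override variable are made up for illustration, and the published port and the webserver argument are assumptions based on the URL and process list shown here.

```shell
# Hypothetical stand-ins for the Makefile targets run, tty and kill.
# Function names and the DOCKER override are assumptions for this sketch.
DOCKER="${DOCKER:-docker}"
IMAGE="puckel/docker-airflow"

# Print the IDs of running containers started from the image.
airflow_ids() {
    $DOCKER ps -q --filter "ancestor=$IMAGE"
}

# Start the webserver in the background and publish port 8080.
airflow_run() {
    $DOCKER run -d -p 8080:8080 "$IMAGE" webserver
}

# Open an interactive shell in the first running container.
airflow_tty() {
    id="$(airflow_ids | head -n 1)"
    [ -n "$id" ] || { echo "no running $IMAGE container" >&2; return 1; }
    $DOCKER exec -it "$id" /bin/bash
}

# Kill every running container started from the image.
airflow_kill() {
    ids="$(airflow_ids)"
    [ -n "$ids" ] || { echo "nothing to kill"; return 0; }
    echo "Killing docker-airflow containers"
    # Left unquoted on purpose so each ID becomes its own argument.
    $DOCKER kill $ids
}
```

After sourcing the file, airflow_run, airflow_tty and airflow_kill play the roles of make run, make tty and make kill. Setting DOCKER=echo before calling a function prints the command instead of running it, which is handy for checking what would happen.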

I usually start with a ps to inspect the processes that are running within the container.

airflow@862f352a1c66:~$ ps -eo pid,cmd

PID CMD

1 /usr/local/bin/python /usr/local/bin/airflow webserver

16 /bin/bash

32 gunicorn: master [airflow-webserver]

139 [ready] gunicorn: worker [airflow-webserver]

143 [ready] gunicorn: worker [airflow-webserver]

147 [ready] gunicorn: worker [airflow-webserver]

151 [ready] gunicorn: worker [airflow-webserver]

156 ps -eo pid,cmd

We can see that the first command run within the container is the Airflow webserver, launched by Python from a script found in /usr/local/bin/.

We can cd and ls our way to the /usr/local/bin/ directory, and do some exploring. See if you can find the flask binary, as well as the gunicorn and celery binaries.

Jumping back into the output from the ps command, I can see that the webserver is running Gunicorn with 4 workers. There is probably a configuration file that controls the number of workers to spin up, and I’d like to take a look at what other configuration options there are.
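The worker count does not have to be read off by eye. Here is a small sketch that uses the process list above as sample input, so it runs anywhere; inside the container you would pipe ps -eo pid,cmd into the same grep. The [g] bracket is a common trick that keeps grep from matching its own command line in a live process list.

```shell
# Sample input copied from the ps output shown earlier in this post.
ps_output='PID CMD
1 /usr/local/bin/python /usr/local/bin/airflow webserver
16 /bin/bash
32 gunicorn: master [airflow-webserver]
139 [ready] gunicorn: worker [airflow-webserver]
143 [ready] gunicorn: worker [airflow-webserver]
147 [ready] gunicorn: worker [airflow-webserver]
151 [ready] gunicorn: worker [airflow-webserver]'

# Count the lines that describe a Gunicorn worker process.
worker_count="$(printf '%s\n' "$ps_output" | grep -c '[g]unicorn: worker')"
echo "Gunicorn workers: $worker_count"
```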

By running an ls in the home directory, I can see there is an airflow.cfg file. So I run a cat airflow.cfg | grep worker and sure enough there is a line that states:

# Number of workers to run the Gunicorn web server

workers = 4

Configuration files within a Docker image are usually easy to change to fit the needs of the user. Within the Dockerfile itself, there is a COPY instruction that copies files from the source directory into the image’s filesystem at build time.

...

COPY config/airflow.cfg ${AIRFLOW_HOME}/airflow.cfg

...

Let’s say I want to make some changes to this configuration file and spin up a new container with fewer Gunicorn workers. In a new shell on my host, I use the kill target from the Makefile above to kill all my running containers:

$ make kill

Killing docker-airflow containers

docker kill 862f352a1c66

862f352a1c66

I then go into the config/airflow.cfg file and I change the number of workers from 4 to 2.
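The same edit can be scripted instead of done by hand. This is a minimal sketch that runs against a throwaway file holding just the lines quoted above, so it is safe to try; the [webserver] section header is an assumption about where the setting sits in the real file. Pointing cfg at config/airflow.cfg would change the real file.

```shell
# Build a throwaway file with only the relevant lines (simplified sample).
cfg="$(mktemp)"
printf '%s\n' \
    '[webserver]' \
    '# Number of workers to run the Gunicorn web server' \
    'workers = 4' > "$cfg"

# Rewrite only the line that starts with "workers =", then swap the file in.
sed 's/^workers = .*/workers = 2/' "$cfg" > "$cfg.new" && mv "$cfg.new" "$cfg"

# Show the resulting setting.
grep '^workers' "$cfg"
```

Anchoring the pattern at the start of the line keeps it from touching other settings that merely contain the word "worker".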

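Once that is saved, you can run the Makefile targets for creating the container and opening a shell: make run and then make tty. Because the COPY step happens when the image is built, the image has to be rebuilt for the change to reach the container; rerun the docker build command above if your run target does not already do so. After running ps you should see only 2 Gunicorn workers running.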

airflow@7bf9a1574369:~$ ps -eo pid,cmd

PID CMD

1 /usr/local/bin/python /usr/local/bin/airflow webserver

17 /bin/bash

32 gunicorn: master [airflow-webserver]

37 [ready] gunicorn: worker [airflow-webserver]

38 [ready] gunicorn: worker [airflow-webserver]

46 ps -eo pid,cmd

What have we accomplished so far

Following the steps above, within a span of a few minutes you would have:

Downloaded and bootstrapped an entire Python environment ready to run a popular open source package, Airflow.

Created a Docker container that was running an Airflow webserver.

Inspected the important processes that power the Airflow project.

Changed a setting in the Airflow configuration file and started a fresh container with it.

Next Steps

I recommend playing around with the Docker Compose configuration files in the repo to see how a more production-capable version of Airflow might run in a larger company. The steps are not much different from using the standard single container, but in the end you can run an entire application ecosystem, including a full version of Postgres and Celery, all on your local machine.

I hope that you find this exploration useful, and that you use Docker as your first step when exploring new open source projects you’ve been hearing about. I’ve found that ten minutes of hands-on experience can teach you more about a new project than any other resource.